Microsoft Fabric - Data Warehouse

Catalog and documentation

Data Dictionary

Dataedo imports the following objects from Microsoft Fabric - Data Warehouse:

- Tables

- Views

- Procedures

- Functions

- Dependencies

Descriptions, aliases, and custom fields

Once technical metadata is imported, users can edit the description of each object and element, provide meaningful aliases (titles), and document everything with additional custom fields. Dataedo reads extended properties from the following Microsoft Fabric - Data Warehouse objects:

- Tables

  - Columns

- Views

  - Columns

- Procedures

  - Parameters

- Functions

  - Parameters

Data Profiling

Users will be able to run data profiling for a table or view in the warehouse and then save selected data in the repository. This data will be available from Desktop and Web.

Data Quality

Users can check whether data in Microsoft Fabric - Data Warehouse tables is accurate, consistent, complete, and reliable using the Data Quality functionality. Data Quality requires SELECT permission on the tested object.

Connection requirements

Permissions

Importing a database schema requires a certain access level in the documented database. The account used to import or update the schema needs at least the VIEW DEFINITION permission on every object to be documented. For tables and views, the SELECT permission is also sufficient.

To be able to import dependencies, the user needs to have SELECT permission on the sys.sql_expression_dependencies view.
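The permissions described above could be granted with standard T-SQL `GRANT` statements. The following is a minimal sketch in Python that only builds the statement text; the principal name `dataedo_reader` and the object `dbo.Orders` are hypothetical, and whether your Fabric workspace roles already cover these permissions is not stated here, so review the output before running it in the warehouse.

```python
def quote_ident(name: str) -> str:
    """Wrap a T-SQL identifier in brackets, doubling any closing bracket."""
    return "[" + name.replace("]", "]]") + "]"


def grant_statements(principal: str, objects: list[tuple[str, str]]) -> list[str]:
    """Build GRANT statements for a schema import.

    `objects` is a list of (schema, object_name) pairs to be documented.
    The principal and object names are supplied by the caller; nothing
    here is executed against a database.
    """
    who = quote_ident(principal)
    statements = [
        f"GRANT VIEW DEFINITION ON OBJECT::{quote_ident(schema)}.{quote_ident(name)} TO {who};"
        for schema, name in objects
    ]
    # Only needed when dependencies should be imported as well.
    statements.append(
        f"GRANT SELECT ON OBJECT::[sys].[sql_expression_dependencies] TO {who};"
    )
    return statements


if __name__ == "__main__":
    # Hypothetical principal and object, for illustration only.
    for stmt in grant_statements("dataedo_reader", [("dbo", "Orders")]):
        print(stmt)
```

Generating the statements instead of executing them keeps the sketch independent of any database driver and lets an administrator inspect the exact grants first.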

Finding server name

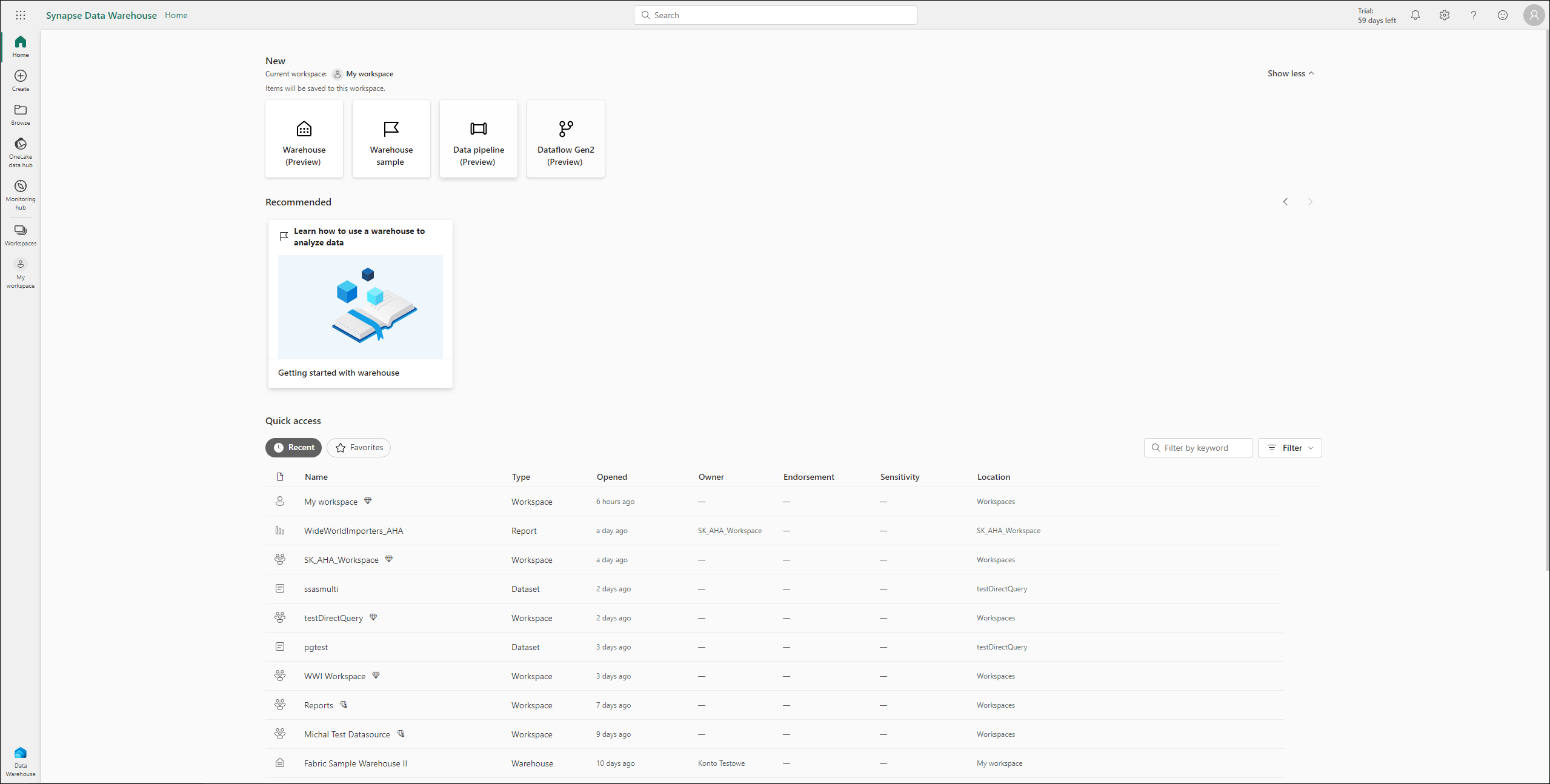

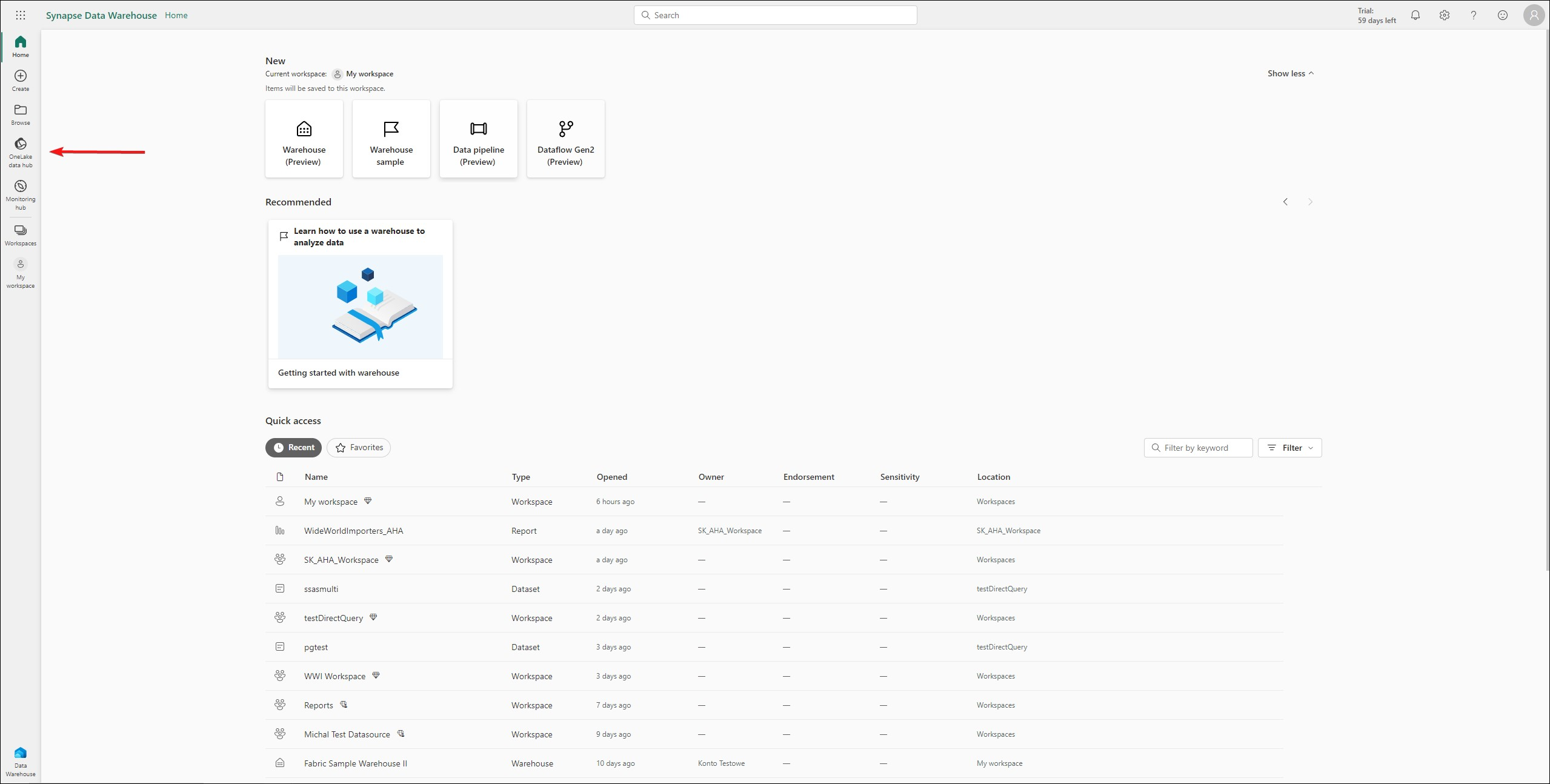

Open Microsoft Fabric.

In the left side panel, choose OneLake data hub.

In the object list, find the Warehouse you want to document and click the three dots [...].

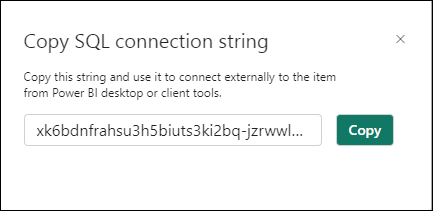

From the context menu, pick Copy SQL connection string.

Copy the connection string and paste it as your Server name.
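Before pasting, it can help to tidy the copied value. The sketch below is an illustration only: it assumes the copied string is a host name that may carry stray whitespace, a `tcp:` prefix, or a `,port` suffix, and it assumes Fabric warehouse endpoints typically end with `.datawarehouse.fabric.microsoft.com`. Neither assumption comes from this page, and the host name used is made up.

```python
# Assumed typical suffix of a Fabric warehouse SQL endpoint (not from this page).
FABRIC_SUFFIX = ".datawarehouse.fabric.microsoft.com"


def clean_server_name(pasted: str) -> str:
    """Strip whitespace, an optional 'tcp:' prefix, and an optional ',port' suffix."""
    host = pasted.strip()
    if host.lower().startswith("tcp:"):
        host = host[len("tcp:"):]
    # Keep only the part before the first comma, which would introduce a port.
    return host.split(",", 1)[0].strip()


def looks_like_fabric_endpoint(host: str) -> bool:
    """Heuristic check against the assumed endpoint suffix."""
    return host.lower().endswith(FABRIC_SUFFIX)


if __name__ == "__main__":
    # Hypothetical clipboard content.
    raw = "  tcp:abc123.datawarehouse.fabric.microsoft.com,1433\n"
    host = clean_server_name(raw)
    print(host, looks_like_fabric_endpoint(host))
```

The suffix check is a heuristic only; a value that fails it may still be valid in your environment.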

Connecting to Microsoft Fabric - Data Warehouse

Add new connection

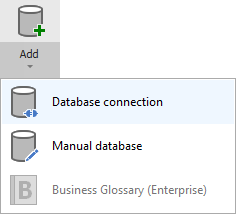

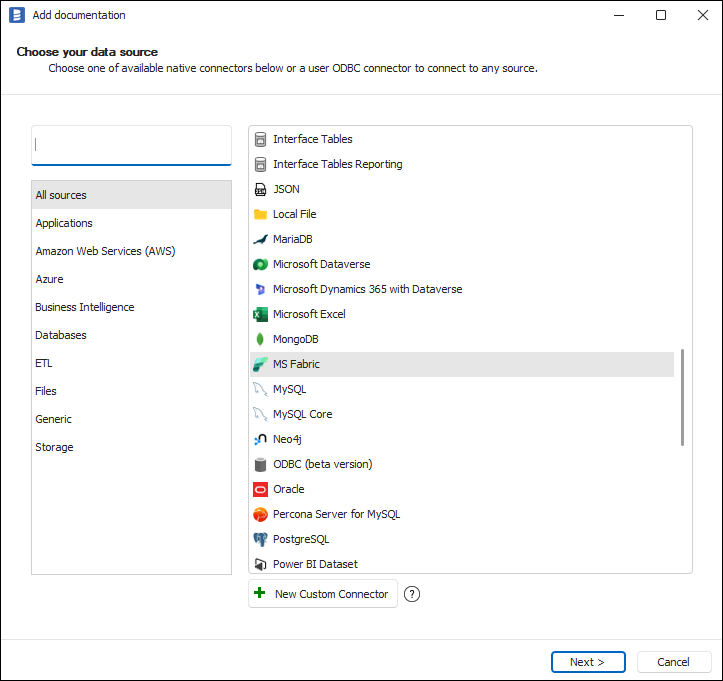

To connect to a Microsoft Fabric - Data Warehouse instance, create new documentation by clicking Add and choosing Database connection.

On the connection screen choose MS Fabric.

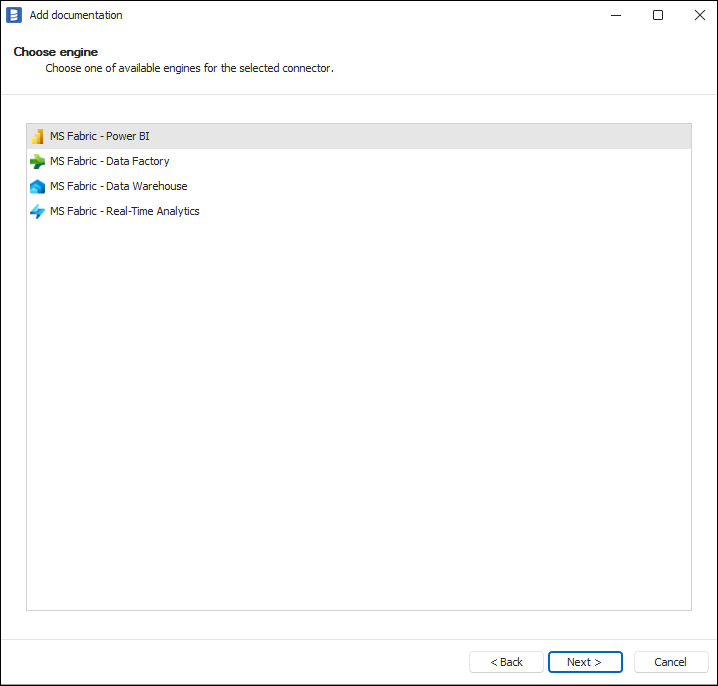

and pick MS Fabric - Data Warehouse

Connection details

Provide connection details:

- Server name - Name of the server you would like to connect to (if you don't know where to find the server name, read the explanation above in Connection requirements)

- Port - Port of the service

- Authentication - Authentication method used when connecting:

  - Windows Authentication - Uses the currently logged-in Windows user. Does not require any other information.

  - SQL Server Authentication - SQL authentication. Requires a SQL login:

    - User

    - Password

  - Microsoft Entra ID - Password - Authentication with Microsoft Entra. Requires:

    - User - AD username

    - Password - Password for the provided username

  - Microsoft Entra ID - Integrated - Uses the AD account currently logged in to Windows.

  - Microsoft Entra ID - Service Principal - Authentication using a Service Principal

  - Microsoft Entra ID - Universal with MFA - Authentication through an external Azure AD service. You can optionally provide a username.

- Connection mode - Select the way you want to encrypt data

- Database - Name of the Microsoft Fabric - Data Warehouse database
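To check the same connection details outside Dataedo, the fields above can be assembled into a generic ODBC connection string. This is a sketch under assumptions and does not describe how Dataedo connects internally: the mapping from the option labels to the Microsoft ODBC driver's `Authentication` keyword values is my own, the default port 1433 is an assumption, and the server, database, and user values are hypothetical.

```python
# Assumed mapping from the option labels above to ODBC "Authentication" values.
AUTH_KEYWORDS = {
    "Microsoft Entra ID - Password": "ActiveDirectoryPassword",
    "Microsoft Entra ID - Integrated": "ActiveDirectoryIntegrated",
    "Microsoft Entra ID - Service Principal": "ActiveDirectoryServicePrincipal",
    "Microsoft Entra ID - Universal with MFA": "ActiveDirectoryInteractive",
}


def build_odbc_connection_string(server, database, auth_option,
                                 port=1433, user=None, password=None):
    """Assemble an ODBC connection string from the connection details.

    Values containing ';' or braces would need extra escaping, which this
    sketch does not handle.
    """
    if auth_option not in AUTH_KEYWORDS:
        raise ValueError(f"No ODBC mapping assumed for: {auth_option}")
    parts = [
        "Driver={ODBC Driver 18 for SQL Server}",
        f"Server={server},{port}",
        f"Database={database}",
        f"Authentication={AUTH_KEYWORDS[auth_option]}",
        "Encrypt=yes",
    ]
    if user:
        parts.append(f"UID={user}")
    if password:
        parts.append(f"PWD={password}")
    return ";".join(parts) + ";"


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    print(build_odbc_connection_string(
        "example.datawarehouse.fabric.microsoft.com",
        "SalesWarehouse",
        "Microsoft Entra ID - Universal with MFA",
        user="user@example.com",
    ))
```

The resulting string could be passed to an ODBC client such as `pyodbc.connect` to confirm that the server name, database, and account work before configuring the import.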

Saving password

You can save the password for later connections by checking the Save password option. Passwords are securely stored in the repository database.

Importing schema

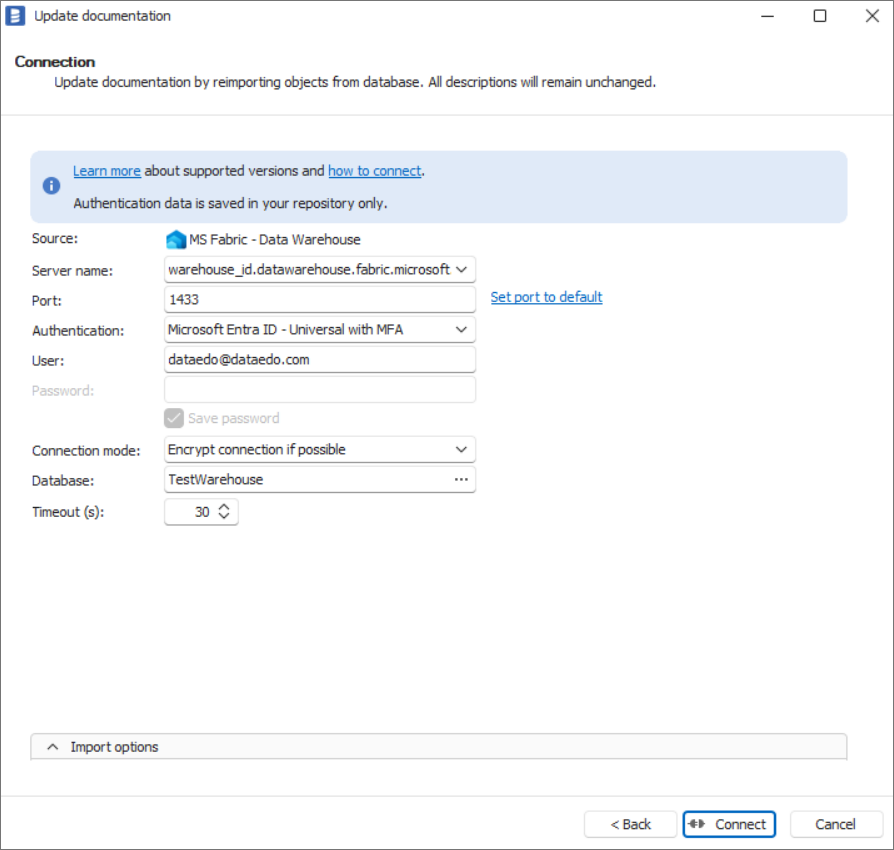

After providing the required connection parameters, click the Connect button so that Dataedo can connect to your Microsoft Fabric instance and load workspaces.

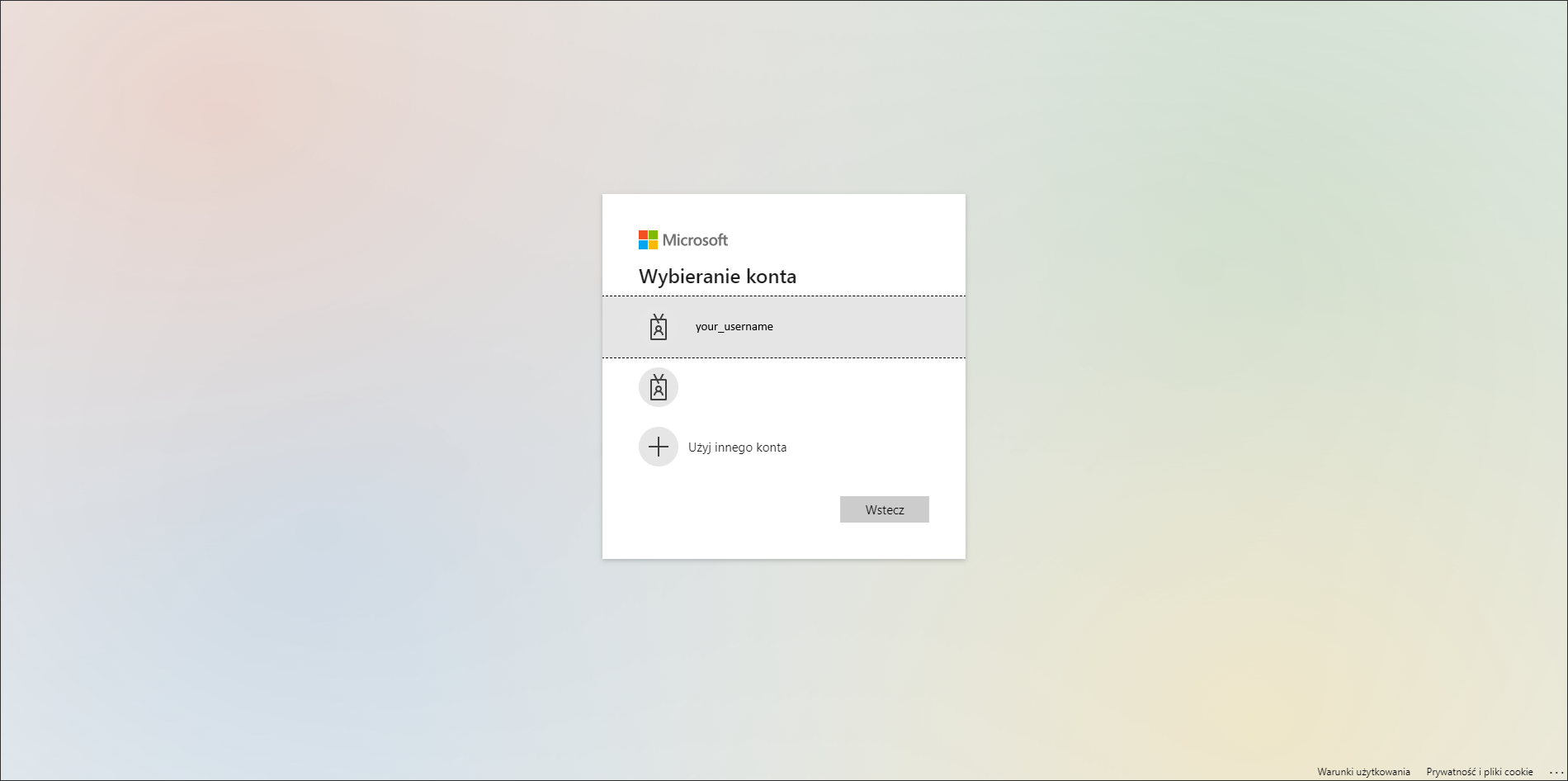

If you choose the Microsoft Entra ID - Universal with MFA authentication type, select your account on the Microsoft authentication screen that pops up.

If it's your first use of Dataedo, you also need to accept the license agreement and give the required permissions to Dataedo.

Once the connection succeeds, Dataedo reads the objects and shows a list of those found. You can choose which objects to import. You can also use an advanced filter to narrow down the list of objects.

Confirm the list of objects to import by clicking Next.

The next screen allows you to change the default name of the documentation under which your schema will be visible in the Dataedo repository.

Click Import to start the import.

When done, close the import window with the Finish button.

Your database schema has been imported to new documentation in the repository.

Importing changes

To sync any schema changes from Microsoft Fabric - Data Warehouse and reimport technical metadata, choose the Import changes option. You will be asked to connect to Microsoft Fabric - Data Warehouse again, and changes will be synced from the source.

Scheduling imports

You can also schedule metadata updates using command line files. To do so, use the Save update command option after creating the documentation. The resulting file can be run from the command line to reimport changes into your documentation.
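One way to run the saved command file on a schedule is the Windows Task Scheduler. The sketch below only builds the argument list for the `schtasks` command; the task name and the file path `C:\Dataedo\update_fabric_warehouse.cmd` are hypothetical, and the daily schedule is just an example.

```python
import re


def build_schtasks_args(task_name, command_file, start_time="02:00"):
    """Build arguments for a daily Windows scheduled task.

    `command_file` is the file produced by Save update command. A path
    containing spaces would need additional quoting inside /TR, which
    this sketch does not handle.
    """
    # schtasks expects a 24-hour HH:mm start time.
    if not re.fullmatch(r"([01]\d|2[0-3]):[0-5]\d", start_time):
        raise ValueError(f"start_time must be HH:mm, got {start_time!r}")
    return [
        "schtasks", "/Create",
        "/TN", task_name,      # task name
        "/TR", command_file,   # program to run
        "/SC", "DAILY",        # schedule type
        "/ST", start_time,     # start time
        "/F",                  # overwrite an existing task with the same name
    ]


if __name__ == "__main__":
    # Hypothetical task name and path, for illustration only.
    args = build_schtasks_args(
        "Dataedo Fabric import",
        r"C:\Dataedo\update_fabric_warehouse.cmd",
        "02:30",
    )
    print(args)
```

On a Windows machine the list could be passed to `subprocess.run(args, check=True)` to register the task.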

Specification

Imported metadata

| Metadata | Imported | Editable |
|---|---|---|
| Tables & External Tables | ✅ | ✅ |
| Columns | ✅ | ✅ |
| Data types | ✅ | |
| Nullability | ✅ | |
| Description | ✅ | ✅ |
| Identity (is identity on) | ✅ | |
| Default value | ✅ | ✅ |
| Views | ✅ | ✅ |
| Description | ✅ | ✅ |
| Script | ✅ | ✅ |
| Columns | ✅ | |
| Data types | ✅ | |
| Nullability | ✅ | |
| Description | ✅ | ✅ |
| Identity (is identity on) | ✅ | |
| Default value | ✅ | ✅ |
| Procedures | ✅ | ✅ |
| Script | ✅ | ✅ |
| Parameters | ✅ | ✅ |
| Functions | ✅ | ✅ |
| Script | ✅ | ✅ |
| Parameters | ✅ | ✅ |
| Returned value | ✅ | ✅ |
| Dependencies | ✅ | ✅ |

Supported features

| Feature | Supported |
|---|---|
| Import comments | ✅ |
| Write comments back | |
| Data profiling | ✅ |
| Reference data (import lookups) | |
| Importing from DDL | |
| Generating DDL | ✅ |
| FK relationship tester | ✅ |
| Data quality | ✅ |

Comments

Dataedo reads comments from the following Microsoft Fabric - Data Warehouse objects:

| Object | Read | Write back |
|---|---|---|
| Tables comments | ✅ | |
| External tables comments | ✅ | |
| Views comments | ✅ | |

Data Lineage

| Source | Method | Version |
|---|---|---|
| Tables (column-level) | Metadata API | 23.2 (2023) |
| Views (column-level) | Metadata API | 23.2 (2023) |