Documenting and cataloging Google BigQuery Data Warehouse

Catalog and documentation

Data Dictionary

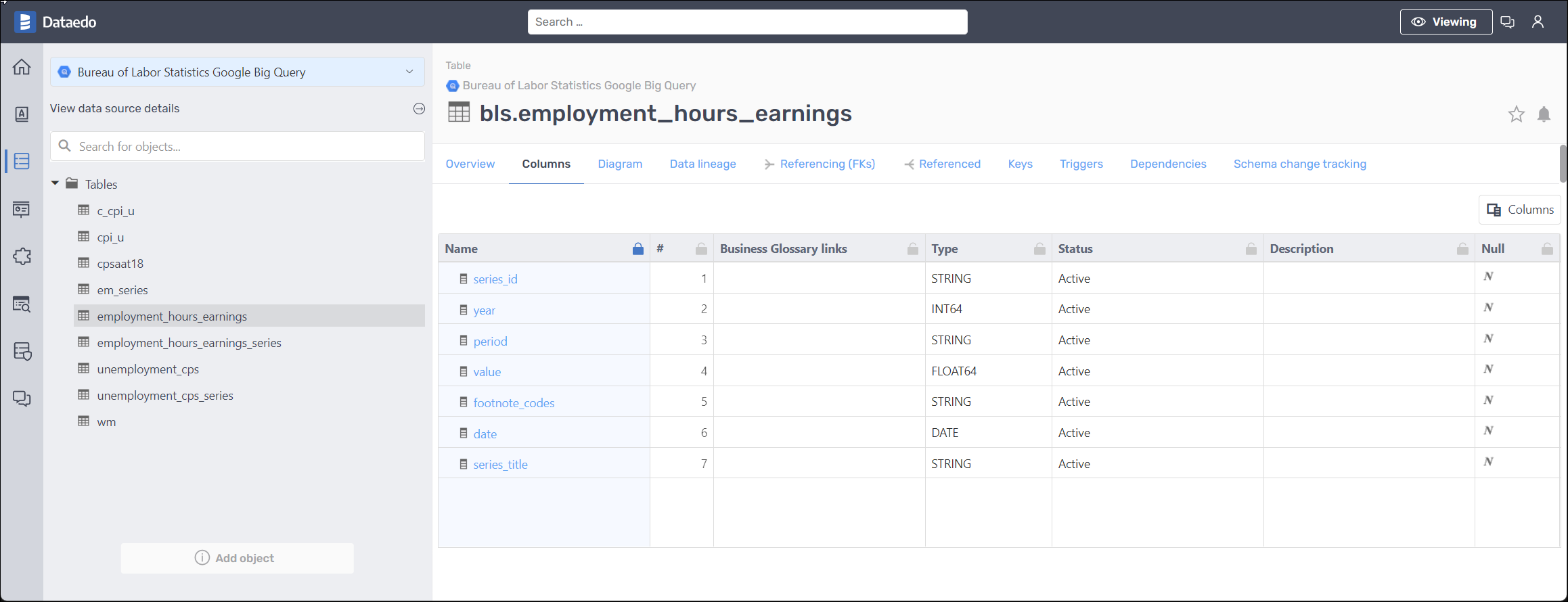

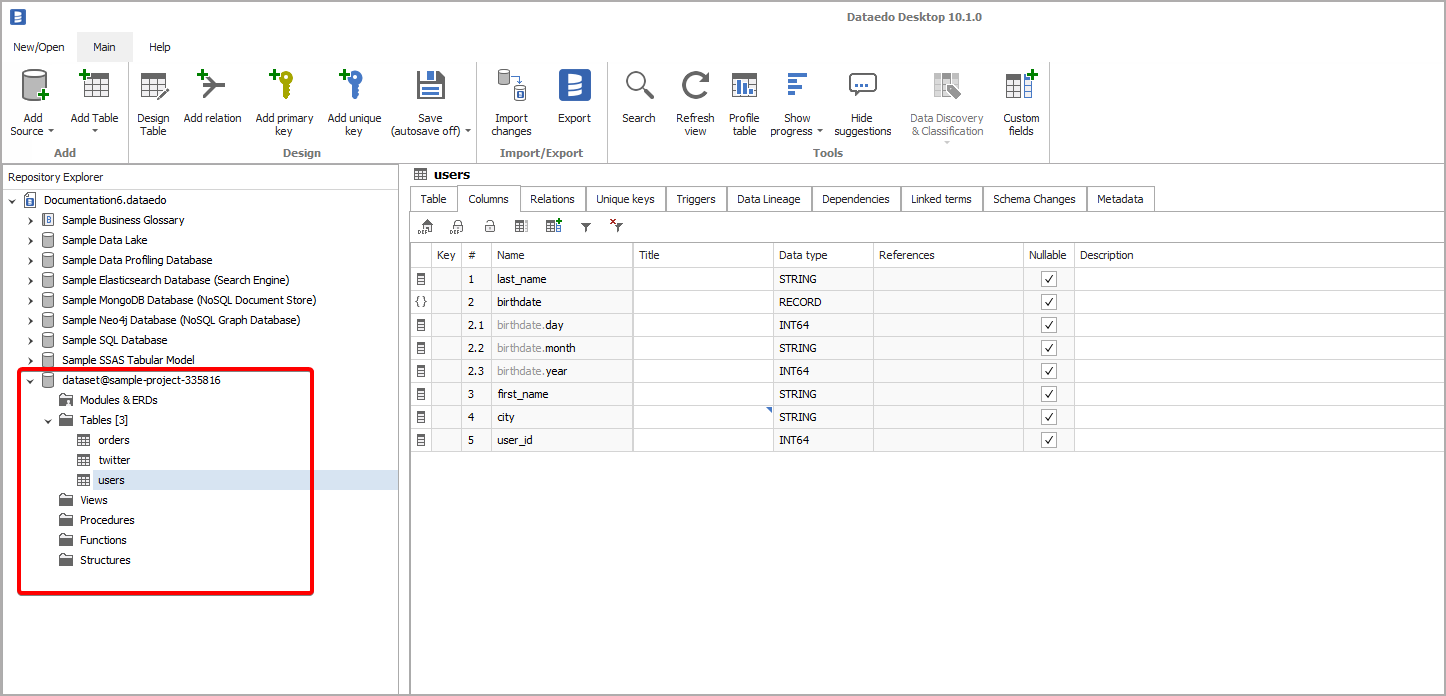

Dataedo imports tables, external tables, views, and their columns.

Stored procedures, user-defined functions

Stored procedures and user-defined functions will be imported with their parameters and code.

Descriptions, aliases, and custom fields

When technical metadata is imported, users will be able to edit descriptions of each object and element, provide meaningful aliases (titles), and document everything with additional custom fields.

Import comments

When importing metadata from Google BigQuery, Dataedo reads table, view, and column comments.

Business Glossary

Users will be able to link a Business Glossary term to any Google BigQuery object.

ER Diagrams

Using manually created foreign keys, Dataedo builds ER diagrams (ERDs) automatically and allows you to create your own manually.

Data Profiling

Users will be able to run data profiling for a table, view, or multiple datasets in the warehouse and then save selected data in the repository. This data will be available from Desktop and Web.

Lookups / Reference data

Users will be able to build Lookups for columns in Google BigQuery tables and views and feed them with distinct values from a column.

Data Classification

Users will be able to run classification on Google BigQuery database in the repository in search of columns containing potentially sensitive data. All built-in functions are supported.

Importing changes and schema change tracking

To import changes from Google BigQuery warehouse and update metadata in Dataedo, simply use the Import changes option. Changes in any Google BigQuery object will be imported and noted in the Schema changes tab.

Description changes

Changes to descriptions in Dataedo Desktop and Web Catalog are tracked and saved in the repository.

Design and generate schema

You will be able to design new tables and columns for your Google BigQuery data warehouse.

Share in Web Catalog or export to HTML, PDF, or Excel

Documentations can be exported by sharing it in the web catalog or generating HTML, PDF, or Excel.

Subject areas

Google BigQuery connector supports Subject Areas.

Prerequisites

Service Account

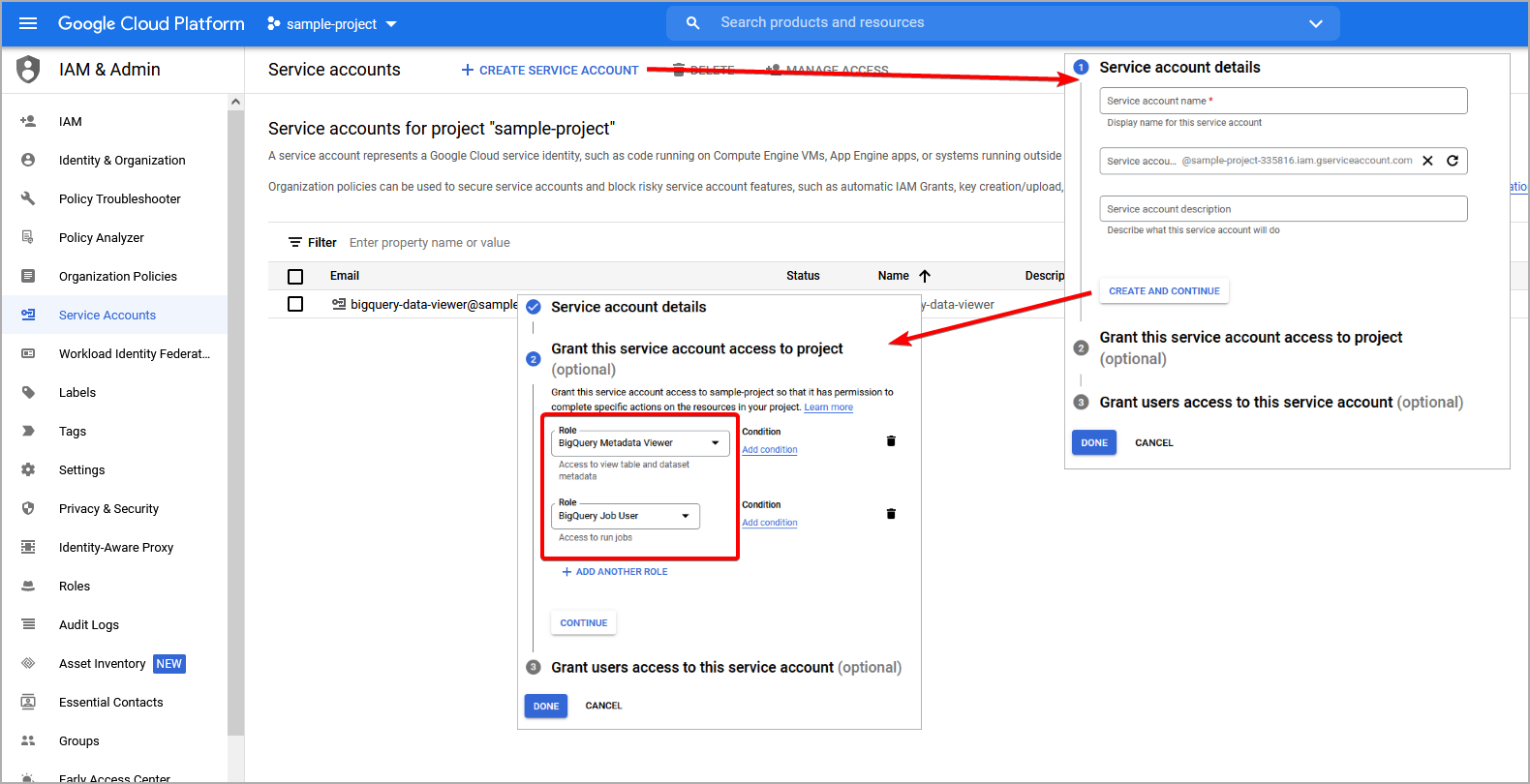

Dataedo connects to Google BigQuery with a Service Account. If you don't have one, please read carefully the following Google Cloud docs:

During the creation (or modification) of the service account, you need to specify roles that will allow Dataedo to document the BigQuery database. Dataedo reads INFORMATION_SCHEMA views to collect metadata, hence minimum permissions (roles) that the service account needs to have are:

-

BigQuery Metadata Viewer - to read metadata,

-

BigQuery Job User - to run a query that reads metadata.

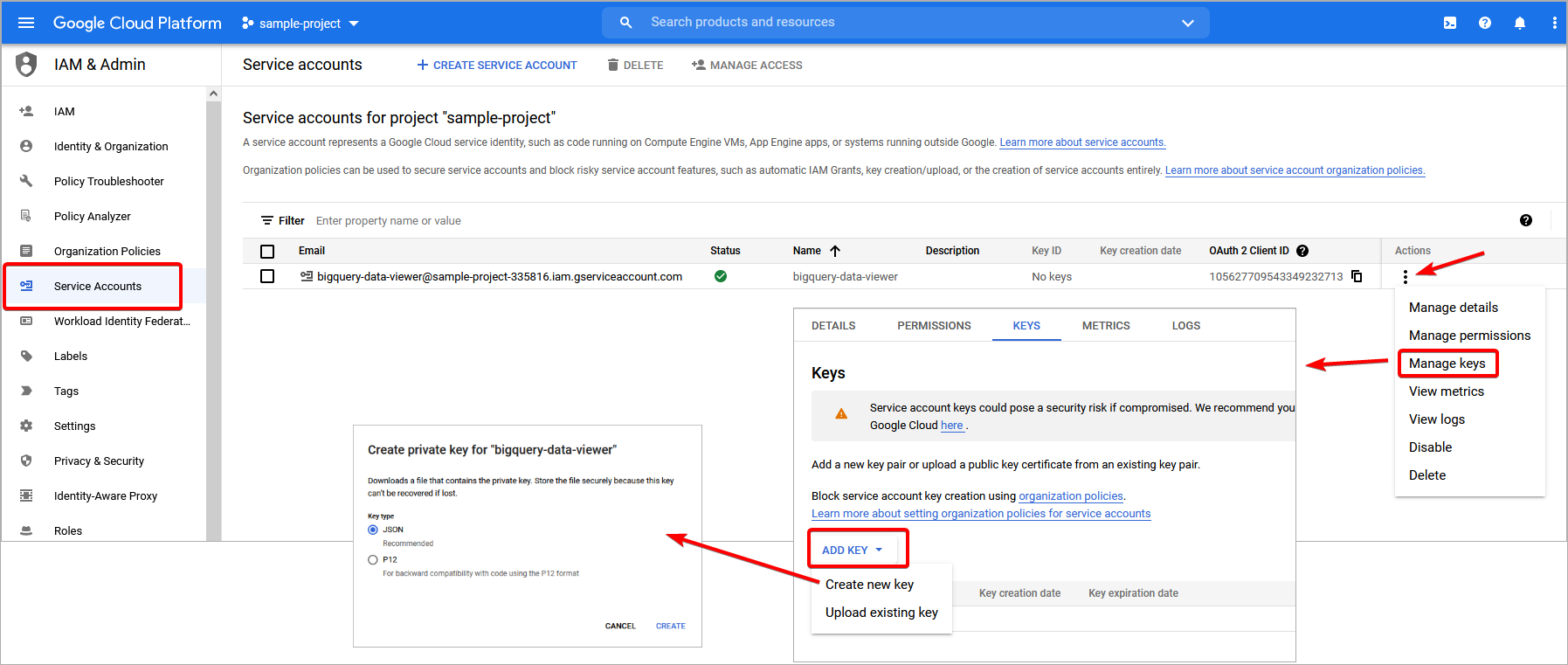

Service account key

To authenticate to Google BigQuery with a service account, you need a Service Account Key. To get one, you need to find the Service Account resource, open the Service Account that will be used to connect from Dataedo, go to the Keys tab and click Add Key -> Create new Key button. Then select JSON format and download the key.

Cloud Resource Manager API

In order to document the BigQuery database, Dataedo needs to access the Cloud Resource Manager API which needs to be enabled first. Most likely this feature will be enabled, however, if it is disabled, you can activate it by searching Cloud Resource Manager API in Google Marketplace and then clicking the Enable button.

Connecting to Google BigQuery

To connect to Google BigQuery, create new documentation by clicking Add documentation and choosing Database connection.

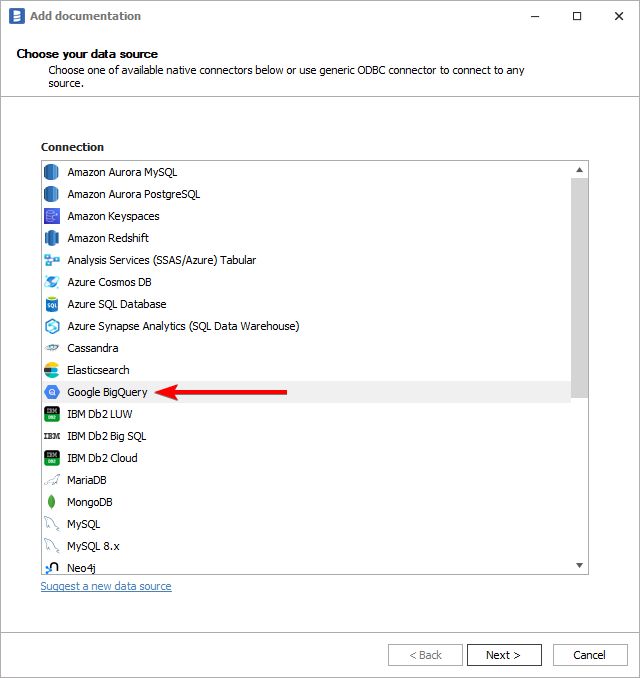

On the Add documentation window choose Google BigQuery:

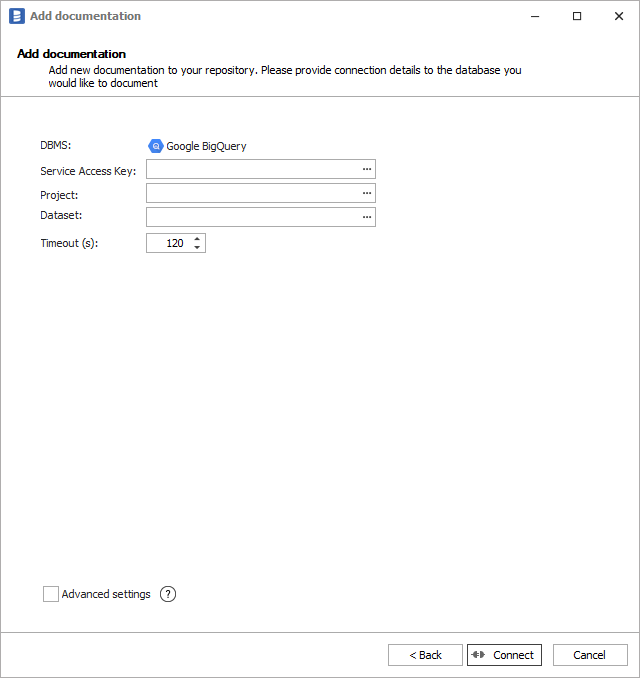

Provide database connection details:

-

Service Access Key - path to Service Account Key. You can open file explorer to browse for the file by clicking the [...] button,

-

Project - select the Google Cloud project under which your BigQuery database was created. You can expand the list of available projects by clicking the [...] button,

-

Dataset - select one or more datasets to document. You can expand the list of datasets by clicking the [...] button.

Saving password

You can save the password for later connections by checking the Save password option. Passwords are saved in the repository database.

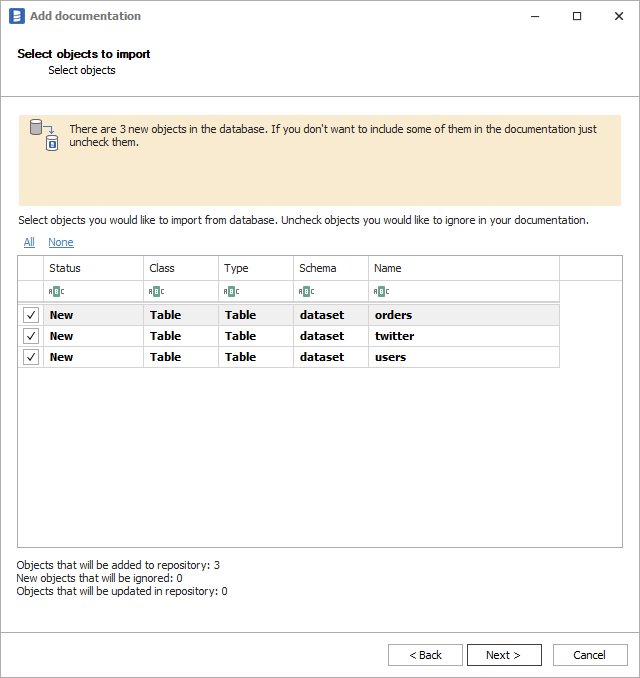

Importing schema

When the connection is successful, Dataedo will read objects and show a list of objects found. You can choose which objects to import. You can also use advanced filter to narrow down the list of objects.

Confirm the list of objects to import by clicking Next.

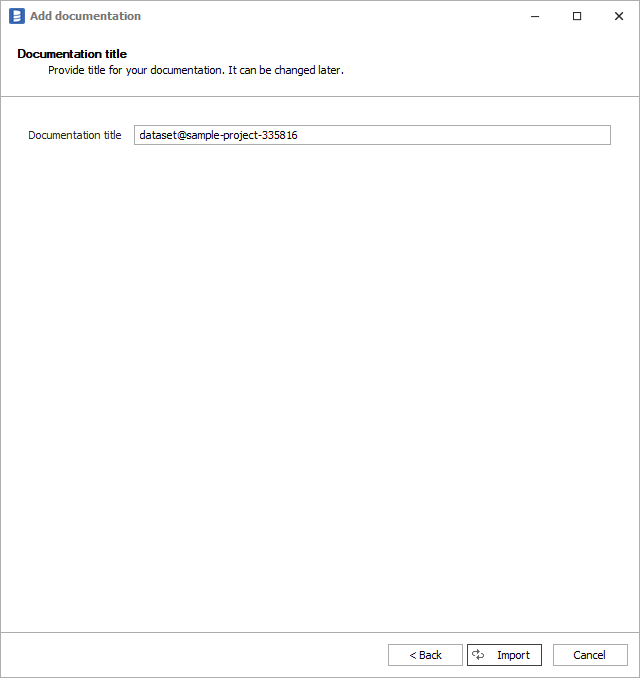

The next screen allows you to change the default name of the documentation under which it will be visible in the Dataedo repository.

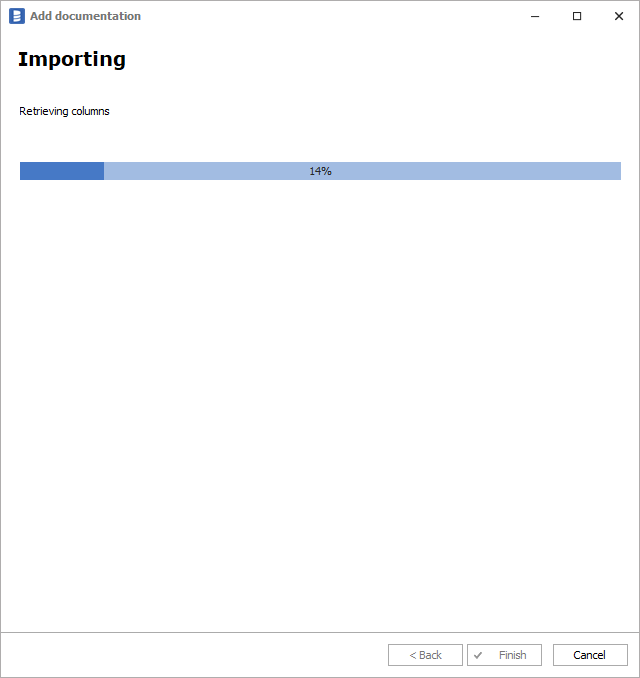

Click Import to start the import.

When done, close the import window with the Finish button.

Your database schema has been imported to new documentation in the repository.

Importing changes

To sync any changes in the schema in Google BigQuery and reimport any technical metadata, simply choose the Import changes option. You will be asked to connect to Google BigQuery again and changes will be synced from the source. Read more about importing schema changes.

Scheduling imports

You can also schedule metadata updates using command line files. Read more about scheduling imports.

Specification

Imported metadata

Dataedo reads the following metadata from Google BigQuery databases.

| Imported | Editable | |

|---|---|---|

| Tables, External tables | ✅ | ✅ |

| Columns | ✅ | ✅ |

| Primitive columns | ✅ | ✅ |

| Nested columns (Records) | ✅ | ✅ |

| Repeated columns | ✅ | ✅ |

| Data types with length | ✅ | ✅ |

| Nullability | ✅ | |

| Column comments | ✅ | ✅ |

| Table comments | ✅ | ✅ |

| Foreign keys | ✅ | |

| Primary keys | ✅ | |

| Unique keys | ✅ | |

| Views | ✅ | ✅ |

| Script | ✅ | ✅ |

| Columns | ✅ | ✅ |

| Primitive columns | ✅ | ✅ |

| Nested columns (Records) | ✅ | ✅ |

| Repeated columns | ✅ | ✅ |

| Data types with length | ✅ | ✅ |

| Nullability | ✅ | |

| Column comments | ✅ | ✅ |

| View comments | ✅ | ✅ |

| Stored procedures | ✅ | ✅ |

| Script | ✅ | ✅ |

| Parameters | ✅ | ✅ |

| Procedures comments | ✅ | ✅ |

| User-defined Functions | ✅ | ✅ |

| Script | ✅ | ✅ |

| Input arguments | ✅ | ✅ |

| Output results | ✅ | ✅ |

| Languages | ✅ | ✅ |

| Function comments | ✅ | ✅ |

| Shared metadata | ||

| Dependencies | ✅ | ✅ |

| Created time | ✅ | |

| Last updated time | ✅ |

Supported features

| Feature | Imported |

|---|---|

| Import comments | ✅ |

| Write comments back | |

| Data profiling | ✅ |

| Reference data (import lookups) | ✅ |

| Generating DDL | ✅ |

| FK relationship tester |

Comments

Dataedo reads comments from the following Google BigQuery objects:

| Object | Read | Write back |

|---|---|---|

| Table comments | ✅ | |

| Column comments | ✅ | |

| View comments | ✅ | |

| Columns | ✅ | |

| Function comments | ✅ | |

| Stored procedures | ✅ |

Data profiling

To perform profiling, Dataedo queries tables hence the service account used for import needs to have BigQuery Data Viewer role or other permissions that allow executing SQL SELECT statements.

Dataedo supports the following data profiling in Google BigQuery:

| Profile | Support |

|---|---|

| Table row count | ✅ |

| Table sample data | ✅ |

| Column distribution (unique, non-unique, null, empty values) | ✅ |

| Min, max values | ✅ |

| Average | ✅ |

| Variance | ✅ |

| Standard deviation | ✅ |

| Min-max span | ✅ |

| Number of distinct values | ✅ |

| Top 10/100/1000 values | ✅ |

| 10 random values | ✅ |

Read more about profiling in a Data Profiling documentation.

Known issues and limitations

The following schema elements currently are not supported:

- Labels

- Friendly names

- Table partitions

- Jobs

- User-defined temp Function